Load balancing Ceph Object Gateways

Benefits of load balancing Ceph Object Gateways (RGW)

Load balancing Ceph Object Gateways (RGW) provides High Availability, enhanced scalability, and improved performance:

- High Availability (HA): Load balancing ensures that the Ceph Object Gateway service remains accessible, even if individual RGW daemons or nodes fail. The load balancer continuously performs health checks on all RGW instances. If an instance becomes unresponsive, the load balancer automatically detects the failure and reroutes client requests to the remaining healthy gateways. This prevents a single point of failure and maintains continuous service availability for S3 and Swift clients. You can take individual RGW nodes offline for maintenance, upgrades, or configuration changes without impacting the overall service. The load balancer simply directs all traffic away from the node being serviced, ensuring zero downtime for your object storage application.

- Scalability: Load balancing makes it easy to handle growing traffic demands by allowing you to add more resources effortlessly. You can add new RGW instances or physical servers on demand to increase the capacity of your object gateway service. The load balancer automatically integrates these new resources and distributes the incoming traffic to them, providing near-linear scaling of your front-end capacity. By distributing the client load, load balancing prevents any single RGW from becoming a centralized bottleneck, allowing the Ceph Object Gateway to handle massive numbers of concurrent requests and high-throughput workloads as your data and user base grow.

- Improved performance: By distributing requests evenly, load balancing maximizes the efficiency of your deployed resources, which leads to better performance. Incoming S3/Swift requests are spread across multiple RGW instances using various algorithms (like least connections or round-robin), ensuring that no single gateway is overwhelmed. This prevents resource exhaustion on any one server. A balanced load ensures that each request is processed by an optimally utilized RGW daemon, leading to lower latency for object storage operations (like PUTs and GETs) and a better overall user experience.
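To make the health-check and distribution behaviour described above more concrete, here is a minimal sketch of round-robin and least-connections selection over a pool of gateways. The `Gateway` class, the gateway names, and the connection counts are hypothetical and purely illustrative; this is not the appliance's actual implementation.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Gateway:
    """One RGW instance as a balancer might track it (hypothetical model)."""
    name: str
    healthy: bool = True          # result of the most recent health check
    active_connections: int = 0   # connections currently open to this gateway


class RoundRobin:
    """Hand out healthy gateways in rotation."""

    def __init__(self, gateways):
        self.gateways = list(gateways)
        self._ticket = count()

    def pick(self):
        # Unhealthy gateways are skipped, so traffic is rerouted automatically.
        healthy = [g for g in self.gateways if g.healthy]
        if not healthy:
            raise RuntimeError("no healthy gateways available")
        return healthy[next(self._ticket) % len(healthy)]


def least_connections(gateways):
    """Pick the healthy gateway with the fewest active connections."""
    healthy = [g for g in gateways if g.healthy]
    if not healthy:
        raise RuntimeError("no healthy gateways available")
    return min(healthy, key=lambda g: g.active_connections)


pool = [Gateway("rgw1"), Gateway("rgw2"), Gateway("rgw3")]
rr = RoundRobin(pool)
first_three = [rr.pick().name for _ in range(3)]

# Simulate a failed health check on rgw2: it no longer receives requests.
pool[1].healthy = False
after_failure = [rr.pick().name for _ in range(4)]

# Least connections prefers the less busy of the remaining gateways.
pool[0].active_connections = 5
pool[2].active_connections = 2
lc_choice = least_connections(pool).name
```

Marking a gateway unhealthy by hand, as in the sketch, is also a simple way to picture taking a node out of rotation for maintenance.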

About Ceph Object Gateways (RGW)

Ceph is a free and open-source object storage solution. It lets you build massively scalable, decentralised storage clusters that hold petabytes or even exabytes of data on standard commodity hardware. At the heart of Ceph is its Reliable Autonomic Distributed Object Store (RADOS), which provides the underlying storage functionality.

Ceph provides three interfaces for accessing its RADOS storage cluster: the Ceph File System (CephFS), which presents storage as a traditional POSIX-compatible file system; the Ceph Block Device (RBD), which allows storage to be mounted as a block device; and the Ceph Object Gateway (RGW), which provides S3-compatible and Swift-compatible API access and is the subject of this page.

Ceph Object Gateway is the common, descriptive name for the object storage interface within a Ceph cluster.

RGW stands for RADOS Gateway and is the common abbreviation for the daemon (service) that implements the Ceph Object Gateway. In other words, RGW is the software component that runs to provide the Ceph Object Gateway service.

The RGW daemon acts as a RESTful HTTP server, providing compatibility with two popular object storage APIs:

- Amazon S3 API: This allows existing S3-compatible applications to seamlessly interact with your Ceph storage cluster.

- OpenStack Swift API: It also provides compatibility with a large subset of the OpenStack Swift API.

The RGW processes handle all client-facing requests, translating them into operations on the underlying RADOS storage system.

Why Loadbalancer.org for Ceph Object Gateways (RGW)?

Loadbalancer’s intuitive Enterprise Application Delivery Controller (ADC) is designed to save time and money with a clever, not complex, WebUI.

Easily configure, deploy, manage, and maintain our Enterprise load balancer, reducing complexity and the risk of human error, for a difference you can see in just minutes.

And with WAF and GSLB included straight out-of-the-box, there are no hidden costs, so the prices you see on our website are fully transparent.

More on what’s possible with Loadbalancer.org.

How to load balance Ceph Object Gateways (RGW)?

The load balancer can be deployed in 4 fundamental ways: Layer 4 DR mode, Layer 4 NAT mode, Layer 4 SNAT mode, and Layer 7 Reverse Proxy (Layer 7 SNAT mode).

When load balancing Ceph Object Gateways, using Layer 4 DR mode or Layer 7 Reverse Proxy is recommended.

It is also possible to use Layer 4 TUN mode or Layer 4 NAT mode in specific circumstances. However, these load balancing methods come with many caveats and should only be considered if both Layer 4 DR mode and Layer 7 Reverse Proxy have been discounted.

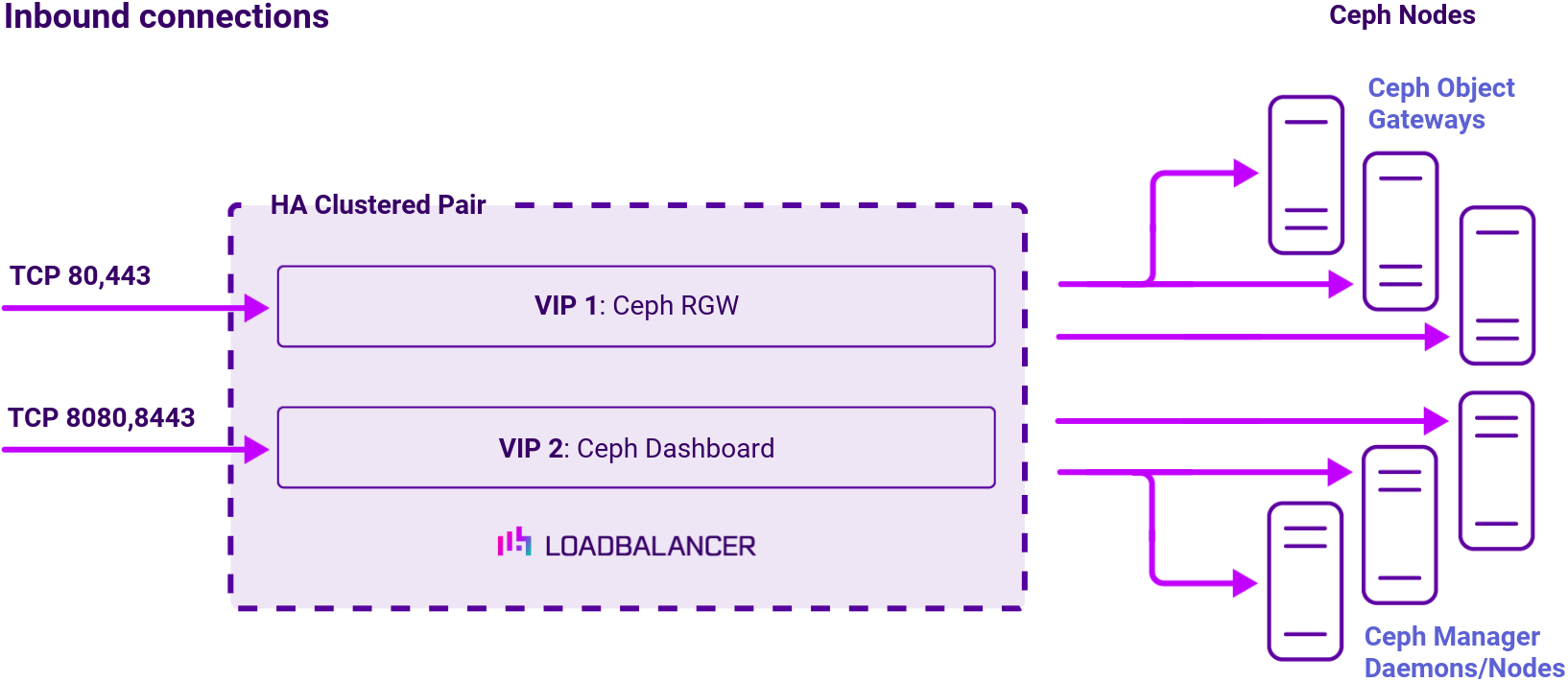

Virtual service (VIP) requirements

To provide load balancing and HA for Ceph Object Gateways, one VIP is required:

- Ceph RGW

Optionally, an additional VIP can be used to make the Ceph Dashboard highly available from a single IP address, if the optional dashboard module is in use:

- Ceph Dashboard

Load balancing deployment concept

Note

The load balancer can be deployed as a single unit, although we recommend a clustered pair for resilience and high availability.

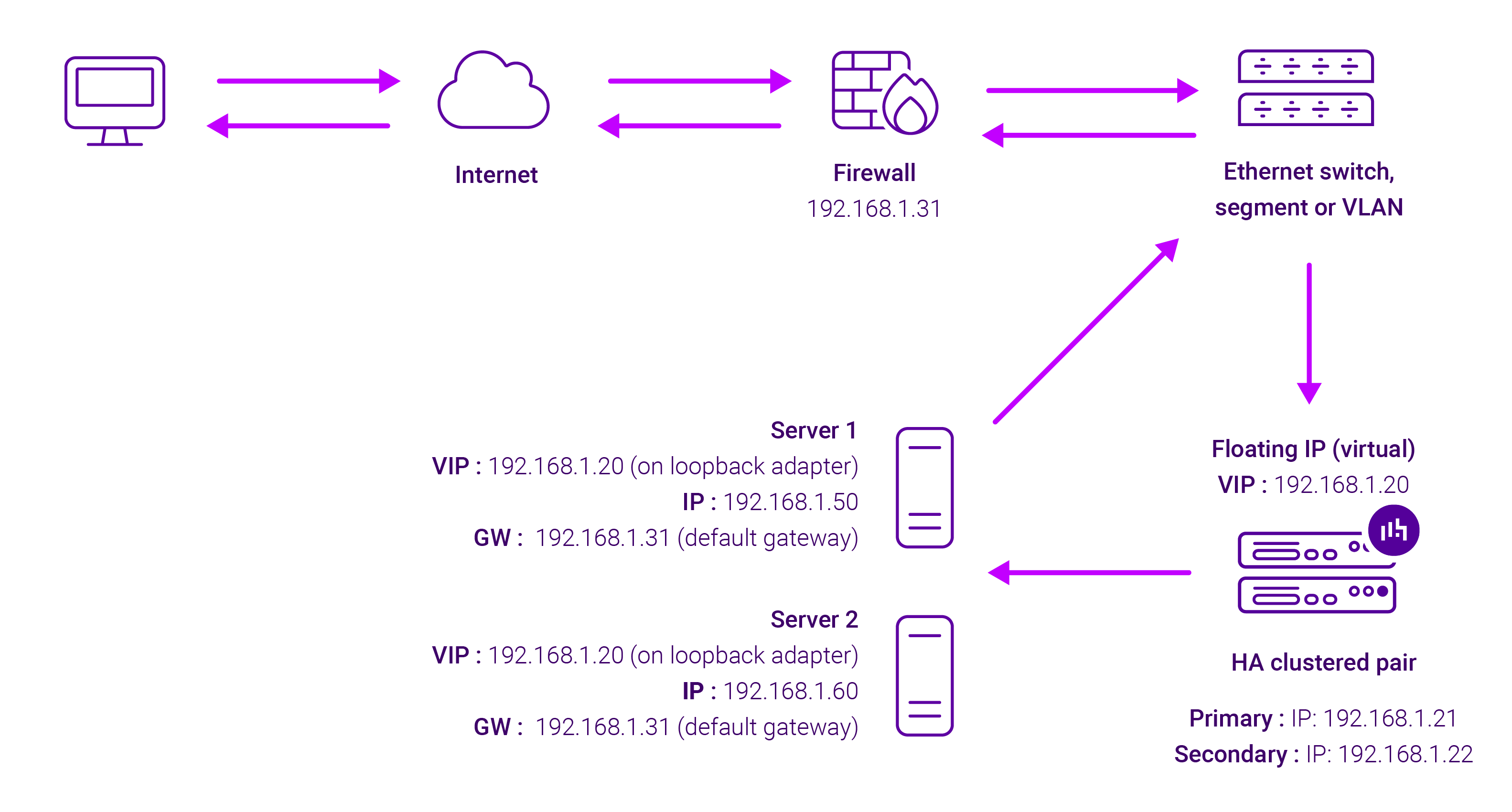

About Layer 4 DR load balancing

One-arm direct routing (DR) mode is a very high performance solution that requires little change to your existing infrastructure:

DR mode works by changing the destination MAC address of the incoming packet on the fly to match the selected Real Server, which is very fast.
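The MAC rewrite can be pictured with a small model of a frame. This is only an illustrative sketch: the `Frame` class and all MAC and IP addresses are made-up example values, and a real load balancer does this in the kernel rather than in Python.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    """Simplified view of an Ethernet frame carrying a TCP/IP packet."""
    dst_mac: str
    src_ip: str
    dst_ip: str
    dst_port: int


def dr_forward(frame, real_server_mac):
    """Return the frame as it leaves the balancer in DR mode.

    Only the destination MAC changes; the IP addresses and port are untouched.
    """
    return replace(frame, dst_mac=real_server_mac)


# A client request arriving at the VIP (192.0.2.100) on the balancer's MAC.
incoming = Frame(
    dst_mac="02:00:00:00:00:01",
    src_ip="203.0.113.10",
    dst_ip="192.0.2.100",
    dst_port=80,
)
forwarded = dr_forward(incoming, real_server_mac="02:00:00:00:00:11")
```

Because the packet itself is not modified, the forwarded frame still carries the VIP as its destination address and the client's address as its source.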

When the packet reaches the Real Server, it expects the Real Server to own the Virtual Service’s IP address (VIP). This means that you need to ensure that the Real Server (and the load balanced application) responds to both the Real Server’s own IP address and the VIP.

The Real Servers should not respond to ARP requests for the VIP. Only the load balancer should do this. Configuring the Real Servers in this way is referred to as Solving the ARP problem.
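On Linux Real Servers, one common way to meet both requirements is to add the VIP to the loopback interface and tune the kernel's `arp_ignore` and `arp_announce` settings. The sketch below only generates the commands as text; the VIP `192.0.2.100` and the interface name `eth0` are assumed example values, and the exact steps for your operating system may differ.

```python
import ipaddress


def linux_dr_real_server_config(vip, interface="eth0"):
    """Build the commands a Linux Real Server might run for DR mode (IPv4 only)."""
    vip = ipaddress.ip_address(vip)
    if vip.version != 4:
        raise ValueError("this sketch only covers IPv4 VIPs")
    sysctl = {
        # arp_ignore=1: reply to ARP only if the target IP is configured
        # on the interface that received the request.
        "net.ipv4.conf.all.arp_ignore": 1,
        f"net.ipv4.conf.{interface}.arp_ignore": 1,
        # arp_announce=2: always use the best local address for the target
        # as the source of ARP requests, so the VIP is not advertised.
        "net.ipv4.conf.all.arp_announce": 2,
        f"net.ipv4.conf.{interface}.arp_announce": 2,
    }
    commands = [f"sysctl -w {key}={value}" for key, value in sysctl.items()]
    # The VIP lives on loopback with a /32 mask so the server accepts traffic for it.
    commands.append(f"ip addr add {vip}/32 dev lo")
    return commands


for line in linux_dr_real_server_config("192.0.2.100"):
    print(line)
```

Settings applied with `sysctl -w` and `ip addr add` do not survive a reboot, so they would normally also be written to persistent configuration.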

On average, DR mode is 8 times quicker than NAT for HTTP, 50 times quicker for Terminal Services and much, much faster for streaming media or FTP.

The load balancer must have an interface in the same subnet as the Real Servers to ensure the Layer 2 connectivity that DR mode requires.

The VIP can be brought up on the same subnet as the Real Servers, or on a different subnet provided that the load balancer has an interface in that subnet.

Port translation is not possible with DR mode, e.g. VIP:80 → RIP:8080 is not supported. DR mode is transparent, i.e. the Real Server will see the source IP address of the client.
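The subnet and port constraints above lend themselves to a quick pre-deployment check. The following is a hedged sketch: `check_dr_mode` is a hypothetical helper, the addresses are example values, and sharing an IP subnet is used here only as an approximation of Layer 2 connectivity.

```python
import ipaddress


def check_dr_mode(lb_interfaces, real_server_ip, vip_port, real_server_port):
    """Return a list of problems that would prevent DR mode from working.

    lb_interfaces: load balancer addresses in CIDR form, e.g. "192.168.1.10/24".
    """
    problems = []
    rip = ipaddress.ip_address(real_server_ip)
    networks = [ipaddress.ip_interface(i).network for i in lb_interfaces]
    # DR mode needs the balancer to reach the Real Server at Layer 2.
    if not any(rip in net for net in networks):
        problems.append(f"no load balancer interface shares a subnet with {rip}")
    # Only the MAC address is rewritten, so the port cannot change.
    if vip_port != real_server_port:
        problems.append(
            f"port translation {vip_port} -> {real_server_port} is not possible in DR mode"
        )
    return problems


ok = check_dr_mode(["192.168.1.10/24"], "192.168.1.21", 80, 80)
bad = check_dr_mode(["192.168.1.10/24"], "10.0.0.21", 80, 8080)
```

An empty list means neither constraint is violated; each string in a non-empty list names one reason to fix the design or consider another mode.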

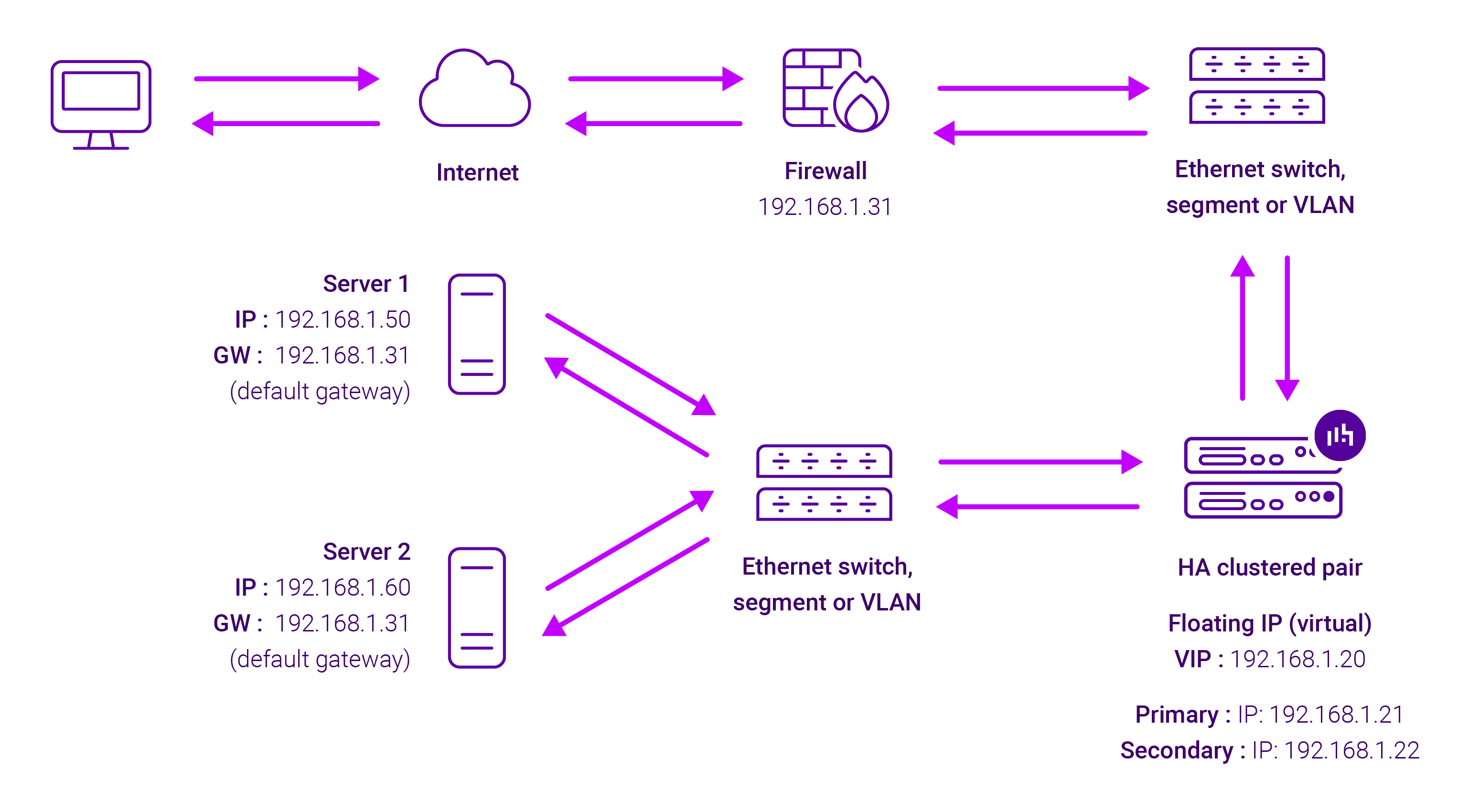

About Layer 7 Reverse Proxy load balancing

Layer 7 Reverse Proxy uses a proxy (HAProxy) at the application layer. Inbound requests are terminated on the load balancer and HAProxy generates a new corresponding request to the chosen Real Server. As a result, Layer 7 is typically not as fast as the Layer 4 methods. Layer 7 is typically chosen either when enhanced options such as SSL termination, cookie-based persistence, URL rewriting, or header insertion/deletion are required, or when the network topology prohibits the use of the Layer 4 methods.

The image below shows an example Layer 7 Reverse Proxy network diagram:

Because Layer 7 Reverse Proxy is a full proxy, Real Servers in the cluster can be on any accessible network including across the Internet or WAN.

Layer 7 Reverse Proxy is not transparent by default, i.e. the Real Servers will not see the source IP address of the client. Instead, they will see the load balancer’s own IP address by default, or any other local appliance IP address if preferred (e.g. the VIP address).

This can be configured per Layer 7 VIP. If required, the load balancer can be configured to provide the actual client IP address to the Real Servers in two ways: either by inserting a header that contains the client’s source IP address, or by modifying the Source Address field of the IP packets and replacing the IP address of the load balancer with the IP address of the client. For more information on these methods, please refer to Transparency at Layer 7 in the Enterprise Admin Manual.
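The header insertion method can be sketched from both sides of the proxy. The page does not name the header, so this example assumes the widely used `X-Forwarded-For` header. The function names, IP addresses, and the plain case-sensitive dictionary used for headers are illustrative simplifications.

```python
def add_forwarded_for(headers, client_ip):
    """Proxy side: return a copy of the request headers with the client IP appended."""
    out = dict(headers)
    existing = out.get("X-Forwarded-For")
    out["X-Forwarded-For"] = f"{existing}, {client_ip}" if existing else client_ip
    return out


def client_ip_from_headers(headers, peer_ip, trusted_proxies):
    """Real Server side: recover the client IP.

    The header is trusted only when the connection comes from a known proxy;
    otherwise a client could spoof its address by sending the header itself.
    """
    forwarded = headers.get("X-Forwarded-For")
    if peer_ip not in trusted_proxies or not forwarded:
        return peer_ip
    # The last entry is the one appended by the trusted proxy.
    return forwarded.split(",")[-1].strip()


load_balancer_ip = "192.168.1.10"
proxied = add_forwarded_for({"Host": "s3.example.com"}, "203.0.113.10")
seen_by_rgw = client_ip_from_headers(proxied, load_balancer_ip, {load_balancer_ip})
```

With this approach the TCP connection still originates from the load balancer, so only applications that read the header see the client address. Rewriting the packet's Source Address field avoids that limitation.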