Load balancing Evertz Mediator-X

Benefits of load balancing Evertz Mediator-X

The key benefits of load balancing Evertz Mediator-X are:

- Optimized performance: Load balancing intelligently and efficiently distributes user traffic and application requests amongst Mediator-X servers. This prevents any single server from becoming overloaded, keeping the whole platform running smoothly and delivering faster response times for media workflows and the best possible user experience.

- High Availability (HA): Load balancing provides redundancy and fault tolerance. By constantly performing health checks on the servers, a load balancer can quickly detect if a server becomes unavailable or too busy and redirect traffic to the remaining healthy servers. This minimizes downtime and mitigates single points of failure, ensuring continuous operation for mission-critical broadcast and media services, i.e. keeping the show on air!

- Scalability: Load balancing makes it straightforward to scale Mediator-X infrastructure. As demands on the platform increase (e.g. more users, more channels, greater throughput), new application servers can be added to the resource pool. The load balancer automatically incorporates these new servers, efficiently distributing the increasing workload across the expanded capacity.
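
The three benefits above can be illustrated with a toy model of a server pool. This is a minimal Python sketch, not how the Loadbalancer.org appliance is implemented: the `ServerPool` class, the health-check callable and the least-connections selection policy are all assumptions chosen for illustration. Healthy servers share the load (performance), failed servers are skipped (HA), and newly added servers are used immediately (scalability).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ServerPool:
    """Toy model of a pool of Mediator-X real servers (hypothetical, for illustration)."""

    # Assumed health check: returns True when the server at this address is healthy.
    health_check: Callable[[str], bool]
    servers: List[str] = field(default_factory=list)
    active_connections: Dict[str, int] = field(default_factory=dict)

    def add_server(self, address: str) -> None:
        # Scalability: a newly added server becomes eligible for traffic immediately.
        if address not in self.servers:
            self.servers.append(address)
            self.active_connections[address] = 0

    def healthy_servers(self) -> List[str]:
        # High availability: servers that fail the health check receive no traffic.
        return [s for s in self.servers if self.health_check(s)]

    def pick(self) -> Optional[str]:
        # Performance: send the request to the healthy server with the fewest
        # active connections (least-connections), so no single server is overloaded.
        candidates = self.healthy_servers()
        if not candidates:
            return None
        chosen = min(candidates, key=lambda s: self.active_connections[s])
        self.active_connections[chosen] += 1
        return chosen
```

For example, with three servers registered and 10.0.0.12 failing its health check, successive calls to `pick()` alternate between 10.0.0.11 and 10.0.0.13 until 10.0.0.12 recovers. For brevity the sketch never releases connections.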

About Evertz Mediator-X

Evertz Mediator-X unifies content acquisition, content processing, media management, production, playout, and delivery into a single, integrated environment. The unification of these services on a single platform delivers optimized media workflows and increased operational efficiency.

Built on over fifteen years of Mediator product development and deployment expertise, Mediator-X has a modern, scalable, infrastructure-agnostic architecture which can be deployed in public cloud, private cloud, or hybrid environments, enabling users to be flexible with their deployment strategies and to grow the platform wherever the business case dictates.

Why Loadbalancer.org for Evertz Mediator-X?

Evertz, a global leader in media and entertainment technology, specifically endorses Loadbalancer.org’s Application Delivery Controller (ADC) appliances for providing high availability (HA), resilience, and performance optimization for their Mediator-X platform.

Our engineers have a wealth of experience with Evertz Mediator-X platform deployments and the wider broadcast media industry, so Evertz and its customers can rely on us to keep the show on air.

Loadbalancer.org’s intuitive Enterprise ADC is also designed to save time and money with a clever, not complex, WebUI.

Easily configure, deploy, manage, and maintain our Enterprise load balancer, reducing complexity and the risk of human error. For a difference you can see in just minutes.

And with WAF and GSLB included straight out-of-the-box, there are no hidden costs, so the prices you see on our website are fully transparent.

How to load balance Evertz Mediator-X

The load balancer can be deployed in four fundamental ways: Layer 4 DR mode, Layer 4 NAT mode, Layer 4 SNAT mode, and Layer 7 Reverse Proxy (Layer 7 SNAT mode).

For Evertz Mediator-X, Layer 4 DR mode is recommended due to its raw throughput and huge scalability. Layer 7 Reverse Proxy can also be used, which allows TLS-based encryption to be added for client traffic; however, this setup does not perform as well as Layer 4 DR mode.

Virtual service (VIP) requirements

To provide load balancing and HA for Evertz Mediator-X, the following VIP is required:

- Mediator-X Global Access

The “Global” virtual service handles Mediator-X user interface traffic and API endpoint traffic. “Global” access to both services is provided using a single virtual service on the load balancer.

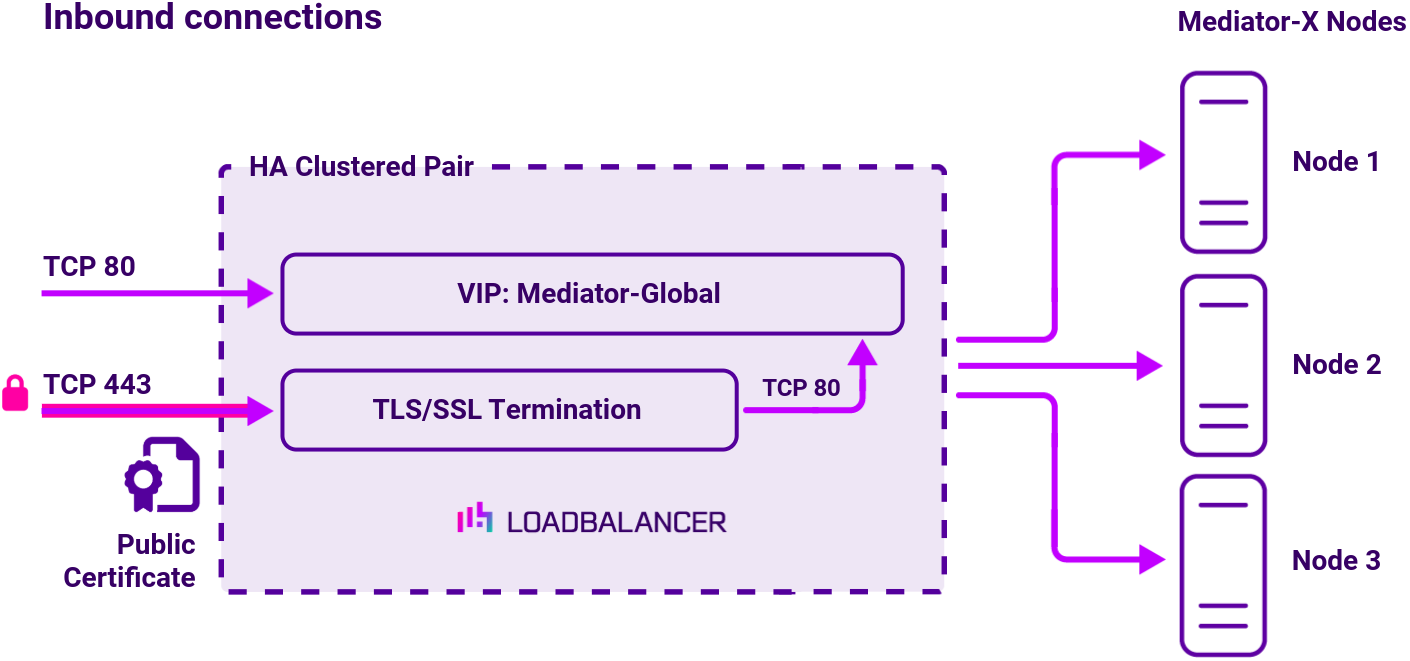

Additionally, a TLS/SSL termination service is required for the scenario that adds TLS-based encryption.

Port requirements

| Port | Protocols | Uses |
|---|---|---|
| 80 | TCP/HTTP | Mediator-X user interface access, Mediator-X API endpoint access |
| 443 | TCP/HTTPS | Mediator-X user interface access over TLS (optional) |

TLS/SSL termination

It is possible to configure a TLS/SSL termination service in front of the plaintext HTTP “Global” virtual service on port 80. Inbound client connections are then secured using TLS, while connections from the load balancer to the Mediator-X servers remain plaintext (unencrypted) HTTP connections on port 80. In this way, client connections can be encrypted without making any changes to the back end Mediator-X servers.

Load balancing deployment concept

Evertz Mediator-X can be load balanced in two different ways:

- Simple deployment: Uses a single virtual service to load balance all of the port 80 traffic used by Mediator-X (both the user interface traffic and the API endpoint traffic).

- Deployment using TLS-based encryption: An alternative deployment type that should only be used when client connections must be secured using TLS-based encryption. With this deployment type, clients connect to the Mediator-X user interface using HTTPS on port 443.

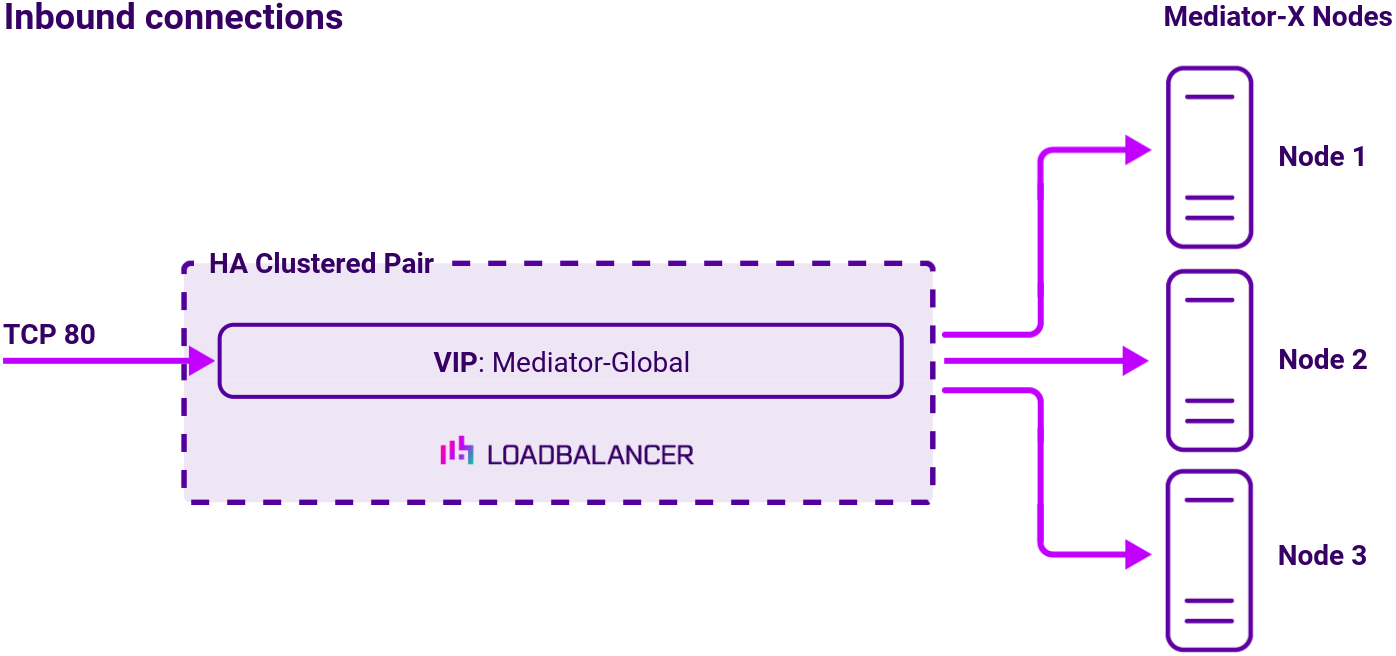

Scenario 1: A simple deployment

Note

The load balancer can be deployed as a single unit, although Loadbalancer.org recommends a clustered pair for resilience & high availability.

In this deployment, a single virtual service is used. The virtual service uses Layer 4 DR mode, offering the greatest possible network speed and scalability.

Layer 4 DR mode is the load balancing method that has traditionally been used with Evertz Mediator deployments.

Scenario 2: Deployment using TLS-based encryption

Note

The load balancer can be deployed as a single unit, although Loadbalancer.org recommends a clustered pair for resilience & high availability.

In this deployment, one virtual service is used in addition to a TLS/SSL termination service. The virtual service uses Layer 7 Reverse Proxy mode. This alternative deployment type allows Mediator-X traffic to be secured using TLS, with clients sending encrypted traffic on port 443 instead of plaintext traffic on port 80.
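
The choice between the two scenarios comes down to one question: must client connections be encrypted? The Python sketch below simply encodes the settings this guide describes for each scenario (load balancing mode, client and Real Server ports, TLS termination, and the persistence method covered in the persistence section). The `VirtualServicePlan` structure and `plan_mediator_x` function are hypothetical summaries, not part of the appliance's configuration interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualServicePlan:
    """Summary of one Mediator-X deployment scenario (hypothetical structure)."""

    scenario: str
    mode: str
    client_port: int
    real_server_port: int
    tls_termination: bool
    persistence: str


def plan_mediator_x(tls_required: bool) -> VirtualServicePlan:
    """Return the scenario described in this guide for the given TLS requirement."""
    if not tls_required:
        # Scenario 1: recommended and fastest; clients use plaintext HTTP on port 80.
        return VirtualServicePlan(
            scenario="Scenario 1: simple deployment",
            mode="Layer 4 DR",
            client_port=80,
            real_server_port=80,
            tls_termination=False,
            persistence="Source IP",
        )
    # Scenario 2: TLS is terminated on the load balancer; traffic to the
    # Mediator-X servers stays plaintext HTTP on port 80.
    return VirtualServicePlan(
        scenario="Scenario 2: TLS-based encryption",
        mode="Layer 7 Reverse Proxy",
        client_port=443,
        real_server_port=80,
        tls_termination=True,
        persistence="X-Forwarded-For and Source IP",
    )
```

Note that in both scenarios the Mediator-X servers themselves only ever receive plaintext HTTP on port 80.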

Sizing, capacity, and performance for a virtual load balancer deployment

Loadbalancer.org appliances can be deployed as virtual appliances.

- For small deployments handling up to 300 concurrent connections/users, your virtual host should be allocated a minimum of 4 vCPUs, 4 GB of RAM, and 20 GB of disk storage.

- For large deployments handling over 300 concurrent connections/users, your virtual host should be allocated a minimum of 8 vCPUs, 8 GB of RAM, and 20 GB of disk storage.

- For significantly larger deployments, your Evertz representative will give you custom sizing and resource guidelines based on the expected load on your load balancers and your predicted usage profile.
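
The sizing guidance above can be expressed as a small helper. This Python sketch covers only the two published tiers; the guide does not say where a "significantly larger" deployment begins, so `custom_threshold` is a hypothetical parameter you would set from your Evertz representative's advice. When it is exceeded the function returns `None` to signal that custom sizing is needed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmSizing:
    vcpus: int
    ram_gb: int
    disk_gb: int


def minimum_vm_sizing(
    concurrent_users: int, custom_threshold: Optional[int] = None
) -> Optional[VmSizing]:
    """Minimum virtual appliance resources for the expected concurrent connections/users.

    custom_threshold is hypothetical: above it, None is returned and custom
    sizing should be obtained from your Evertz representative.
    """
    if concurrent_users < 0:
        raise ValueError("concurrent_users must be non-negative")
    if custom_threshold is not None and concurrent_users > custom_threshold:
        return None
    if concurrent_users <= 300:
        # Small deployment: up to 300 concurrent connections/users.
        return VmSizing(vcpus=4, ram_gb=4, disk_gb=20)
    # Large deployment: over 300 concurrent connections/users.
    return VmSizing(vcpus=8, ram_gb=8, disk_gb=20)
```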

Persistence (aka Server Affinity)

For the Layer 4 DR mode scenario, the virtual service uses source IP address based persistence.

For the Layer 7 load balancing scenario (the configuration that adds TLS-based encryption), the persistence mode “X-Forwarded-For and Source IP” is used. This uses the X-Forwarded-For HTTP header as the primary persistence method, with the source IP address used as a backup persistence method.
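
The two persistence methods differ only in how the "stickiness" key is derived. The Python sketch below models that: with source IP persistence the key is the client's IP address, and with "X-Forwarded-For and Source IP" the header value is preferred, falling back to the source IP when the header is missing. Treating the left-most X-Forwarded-For entry as the client address is an assumption (a real proxy may be configured to use a different occurrence), and the hypothetical `StickyTable` omits the timeouts a real persistence table would have.

```python
from typing import Callable, Dict, Mapping


def persistence_key(source_ip: str, headers: Mapping[str, str], use_xff: bool) -> str:
    """Return the key used to stick a client to one Mediator-X server.

    use_xff=False models Layer 4 DR source IP persistence.
    use_xff=True models "X-Forwarded-For and Source IP": the header is the
    primary key and the source IP is the backup when the header is absent.
    """
    if use_xff:
        for name, value in headers.items():
            # HTTP header names are case-insensitive.
            if name.lower() == "x-forwarded-for":
                # Assumption: the left-most entry is treated as the client address.
                first = value.split(",")[0].strip()
                if first:
                    return first
    return source_ip


class StickyTable:
    """Hypothetical persistence table mapping a persistence key to a real server."""

    def __init__(self) -> None:
        self._table: Dict[str, str] = {}

    def lookup_or_assign(self, key: str, choose_server: Callable[[], str]) -> str:
        # The first request for a key picks a server; later requests reuse it.
        if key not in self._table:
            self._table[key] = choose_server()
        return self._table[key]
```

Using the header as the primary key helps when the source IP seen by the virtual service is not the client's own address, for example when traffic arrives via an upstream proxy.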

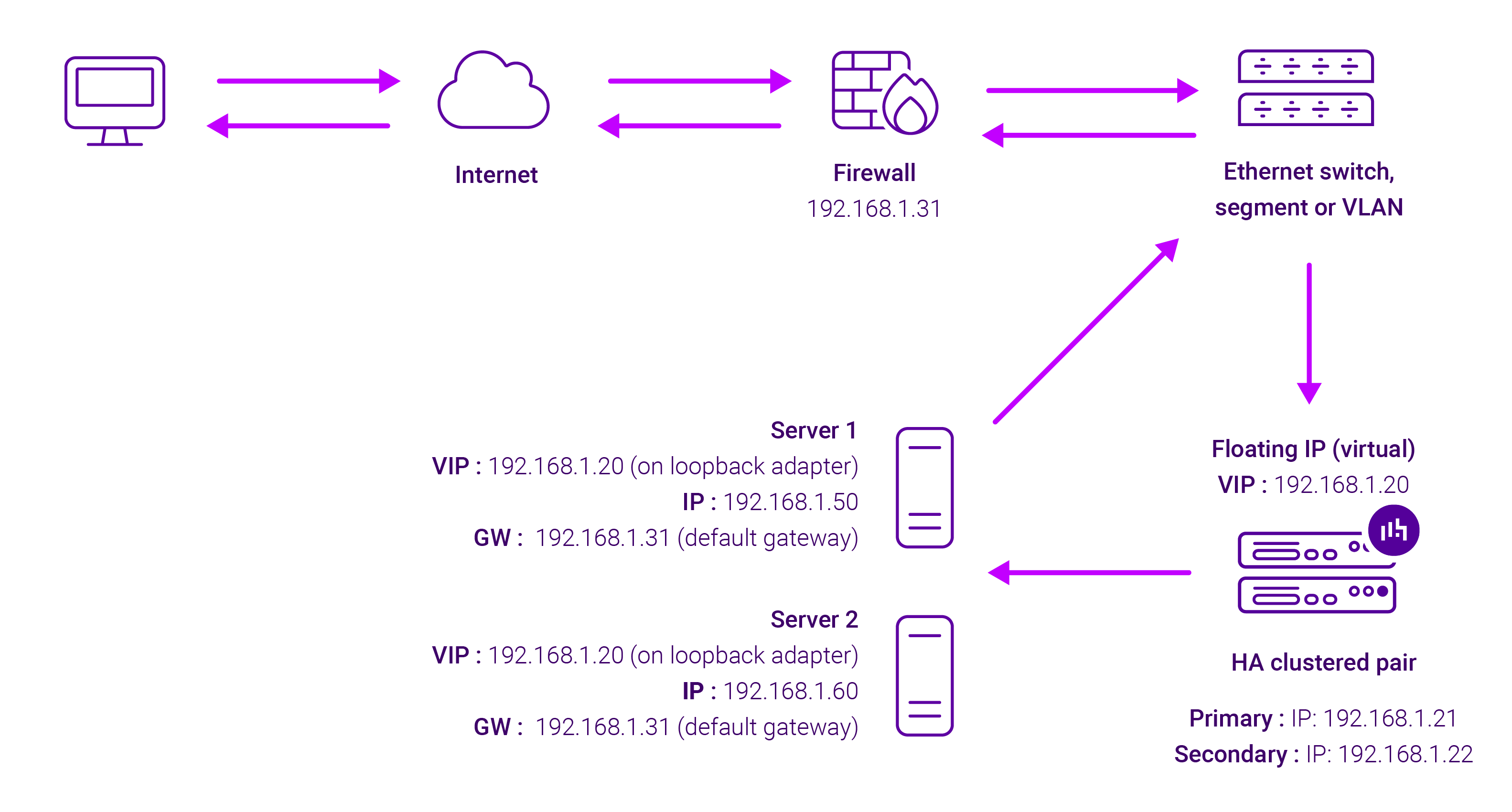

About Layer 4 DR load balancing

Layer 4 DR (Direct Routing) mode is a very high performance solution that requires little change to your existing infrastructure.

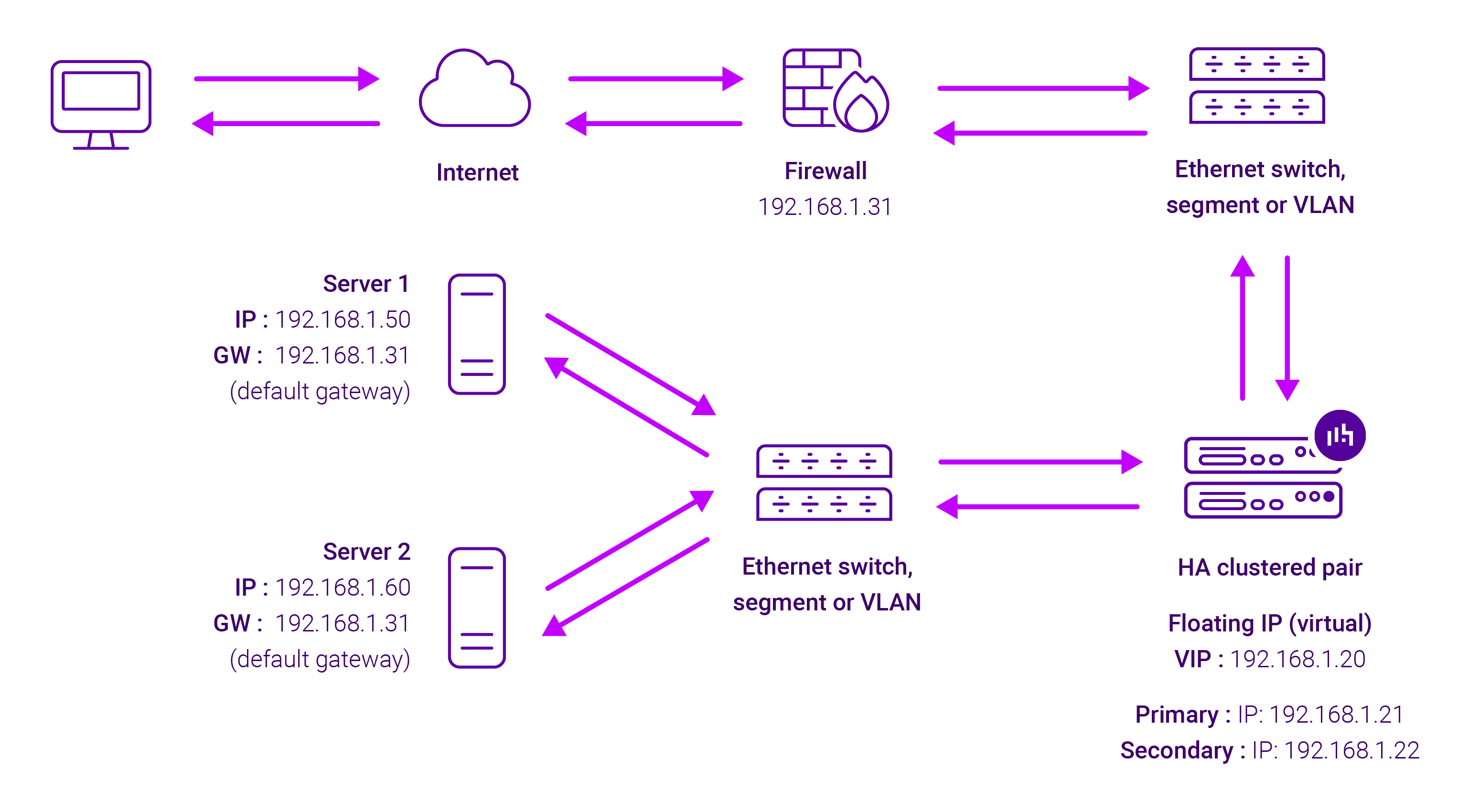

The image below shows an example network diagram for this mode:

DR mode works by changing the destination MAC address of the incoming packet on the fly to match the selected Real Server, which is very fast. When the packet reaches the Real Server, the Real Server must own the Virtual Service’s IP address (VIP). This means that you need to ensure that the Real Server (and the load balanced application) responds to both the Real Server’s own IP address and the VIP.

The Real Server should not respond to ARP requests for the VIP. Only the load balancer should do this. Configuring the Real Servers in this way is referred to as Solving the ARP Problem.
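
The forwarding step described above can be modelled in a few lines. In this Python sketch the `Frame` type is a simplified, hypothetical representation (the Ethernet source MAC and everything else not relevant here are omitted). It shows that only the destination MAC changes: the destination IP is still the VIP, which is why the Real Server must own the VIP, and the source IP is still the client's, which is why DR mode is transparent.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    """Simplified Ethernet frame carrying a TCP/IP packet (source MAC omitted)."""

    dst_mac: str
    src_ip: str
    dst_ip: str
    dst_port: int


def dr_forward(frame: Frame, real_server_mac: str) -> Frame:
    """Model of Layer 4 DR forwarding: only the destination MAC is rewritten.

    The destination IP (the VIP) and port are left unchanged, so the Real
    Server must accept traffic addressed to the VIP, and the source IP is
    still the client's.
    """
    return replace(frame, dst_mac=real_server_mac)
```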

On average, DR mode is 8 times quicker than NAT for HTTP, 50 times quicker for Terminal Services and much, much faster for streaming media or FTP.

The load balancer must have an interface in the same subnet as the Real Servers to provide the Layer 2 connectivity required for DR mode to work. The VIP can be brought up on the same subnet as the Real Servers, or on a different subnet provided that the load balancer has an interface in that subnet. Port translation (using a different RIP port than the VIP port) is not possible in DR mode.
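
The network rules above lend themselves to a quick pre-deployment check. This Python sketch uses the standard `ipaddress` module; the function name and its inputs (load balancer interfaces in CIDR form, the VIP, and `(RIP, port)` pairs) are hypothetical, and it only checks subnet membership and ports, not actual Layer 2 reachability.

```python
import ipaddress
from typing import Iterable, List, Tuple


def dr_mode_problems(
    lb_interfaces: Iterable[str],
    vip: str,
    vip_port: int,
    real_servers: Iterable[Tuple[str, int]],
) -> List[str]:
    """Check the DR mode network rules; returns a list of problems (empty if none).

    lb_interfaces are the load balancer's addresses in CIDR form, e.g. "192.0.2.5/24".
    real_servers are (RIP, port) pairs.
    """
    networks = [ipaddress.ip_interface(i).network for i in lb_interfaces]

    def on_lb_subnet(address: str) -> bool:
        ip = ipaddress.ip_address(address)
        return any(ip in net for net in networks)

    problems: List[str] = []
    # The VIP must be in a subnet where the load balancer has an interface.
    if not on_lb_subnet(vip):
        problems.append(f"VIP {vip} is not in any subnet the load balancer has an interface in")
    for rip, port in real_servers:
        # Each Real Server must share a subnet with the load balancer (Layer 2).
        if not on_lb_subnet(rip):
            problems.append(f"RIP {rip} is not on a load balancer subnet (no Layer 2 connectivity)")
        # DR mode cannot translate ports, so RIP and VIP ports must match.
        if port != vip_port:
            problems.append(f"RIP {rip} uses port {port}, but DR mode cannot translate from VIP port {vip_port}")
    return problems
```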

DR mode is transparent, i.e. the Real Server will see the source IP address of the client.

About Layer 7 Reverse Proxy load balancing

Layer 7 Reverse Proxy uses a proxy (HAProxy) at the application layer. Inbound requests are terminated on the load balancer, and HAProxy generates a new corresponding request to the chosen Real Server. As a result, Layer 7 is typically not as fast as the Layer 4 methods. Layer 7 is typically chosen either when enhanced options such as SSL termination, cookie based persistence, URL rewriting, or header insertion/deletion are required, or when the network topology prohibits the use of the Layer 4 methods.

The image below shows an example Layer 7 Reverse Proxy network diagram:

Because Layer 7 Reverse Proxy is a full proxy, Real Servers in the cluster can be on any accessible network including across the Internet or WAN.

Layer 7 Reverse Proxy is not transparent by default, i.e. the Real Servers will not see the client’s source IP address. Instead, they see the load balancer’s own IP address by default, or any other local appliance IP address if preferred (e.g. the VIP address).

This can be configured per Layer 7 VIP. If required, the load balancer can be configured to provide the actual client IP address to the Real Servers in two ways: either by inserting a header that contains the client’s source IP address, or by modifying the Source Address field of the IP packets, replacing the IP address of the load balancer with the IP address of the client. For more information on these methods, please refer to Transparency at Layer 7 in the Enterprise Admin Manual.
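
The full-proxy behaviour and the header-insertion method of transparency can be sketched as follows. This Python model is illustrative only: the `Request` type and `proxy_request` function are hypothetical, the header lookup is exact-case to keep it short, and the second transparency method (rewriting the IP source address) is not modelled. It shows that the new request to the Real Server comes from the load balancer's own address, and that the client address survives only in the inserted X-Forwarded-For header.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Request:
    source_ip: str
    dest_ip: str
    dest_port: int
    headers: Dict[str, str] = field(default_factory=dict)


def proxy_request(
    client: Request,
    lb_ip: str,
    real_server_ip: str,
    real_server_port: int,
    insert_xff: bool = True,
) -> Request:
    """Model of a full proxy: the inbound request ends here and a new one is built.

    The new request's source IP is the load balancer's own address, so the
    Real Server only learns the client IP if a header carries it.
    """
    headers = dict(client.headers)  # copy, so the inbound request is untouched
    if insert_xff:
        # Append the client address to any existing value (comma-separated list).
        existing = headers.get("X-Forwarded-For")
        headers["X-Forwarded-For"] = f"{existing}, {client.source_ip}" if existing else client.source_ip
    return Request(
        source_ip=lb_ip,
        dest_ip=real_server_ip,
        dest_port=real_server_port,
        headers=headers,
    )
```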