Global Server Load Balancing (GSLB) offers more than just redundancy; it provides a way to implement intelligent, topology-based routing at the DNS level.

Here I look at the technical implementation of GSLB — specifically how to use source-IP recognition and health-aware routing to direct traffic to the optimal node while minimizing network hops.

What you'll learn:

- Global Traffic Steering Mechanics: Understand how GSLB acts as a distributed control plane to reroute traffic across heterogeneous environments (on-prem, private, or public cloud) during local site failures.

- Proximity and Topology-Based Routing: Learn how to implement topology-based routing to minimize latency by directing users to the nearest available data center based on source IP and network hops.

- Advanced Health Checking & State Detection: Explore the implementation of TCP, HTTP(S), and custom external health checks to ensure traffic is only directed to nodes capable of handling the current application load.

- Intelligent Load Distribution: Discover how to move beyond simple Round Robin and use real-time metrics—such as global server load—to dynamically balance traffic between high-performing and overloaded regions.

- Multi-Site Architecture Patterns: Review real-world engineering case studies, such as configuring clustered load balancer pairs for local HA combined with GSLB for site-to-site resilience in high-throughput storage clusters.

What is Global Server Load Balancing (GSLB)?

Global Server Load Balancing (GSLB) is a network traffic management strategy that distributes application requests across servers located in multiple geographic data centers or cloud environments. Operating primarily at the DNS level, GSLB acts as a global traffic controller, ensuring high availability and optimized performance by routing users based on server health and proximity.

Key engineering capabilities:

- Disaster recovery (DR): Automatically reroutes traffic to an alternate site if a server, availability zone, or entire data center fails.

- Geolocation routing: Detects a user’s IP address to direct traffic to the nearest data center, significantly reducing latency and "hops."

- Hybrid and multi-cloud support: Seamlessly balances traffic between on-premise infrastructure and private or public clouds.

- Proactive health monitoring: Continuously checks the state of globally distributed nodes to ensure traffic is only sent to 'healthy' endpoints.

How does it work?

Just as a load balancer distributes traffic between connected servers in a single data center, GSLB distributes traffic between connected servers in multiple locations.

If one server, in any location, fails, or if an entire data center becomes unavailable, GSLB reroutes the traffic to another available server somewhere else in the world.

Equally, GSLB can detect users’ locations and automatically route their traffic to the best available server in the nearest data center.
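As a rough illustration of the two behaviours just described (failover and proximity), here is a minimal Python sketch of the decision a GSLB might make for a single DNS query. The `Site` class, the region labels, and the addresses are hypothetical and chosen only for the example; a real GSLB would derive health and location from live checks and source-IP data.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    region: str      # hypothetical region label, e.g. "eu" or "us"
    address: str     # address returned in the DNS answer
    healthy: bool = True


def resolve(client_region: str, sites: list[Site]) -> list[str]:
    """Return the addresses to hand back for one DNS query."""
    healthy = [s for s in sites if s.healthy]
    local = [s for s in healthy if s.region == client_region]
    # Prefer healthy sites in the client's own region;
    # otherwise fail over to any healthy site elsewhere.
    chosen = local or healthy
    return [s.address for s in chosen]


sites = [
    Site("london", "eu", "192.0.2.10"),
    Site("newyork", "us", "198.51.100.10"),
]
print(resolve("eu", sites))   # ['192.0.2.10']

sites[0].healthy = False      # simulate an outage at the local site
print(resolve("eu", sites))   # ['198.51.100.10']
```

If no site is healthy, the function returns an empty list; what to answer in that case (for example, a last-resort address) is a policy choice the sketch leaves open.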

Demand for GSLB has grown significantly in recent years, as large numbers of organizations have migrated away from traditional on-premise systems and have instead created hybrid cloud and hosted environments.

Many have made the strategic decision to split their data resources across multiple locations, to improve business resilience and reduce costs. In all these instances, GSLB allows organizations to deliver a high quality, reliable experience for users, no matter where they are in the world and no matter where their applications and data are located.

What are the benefits of GSLB?

The benefits of GSLB can be broadly grouped into two categories:

Improved resilience and high availability with GSLB

GSLB improves the resilience and availability of key applications, by enabling all user traffic to be switched instantly and seamlessly to an alternative data center in the event of an unexpected outage.

Organizations can use GSLB to constantly monitor application performance at geographically separate locations and ensure the best possible application availability across multiple sites. When routine maintenance is required, organizations can also use GSLB to temporarily direct user traffic to an alternative site, avoiding the need for disruptive downtime.

In a two-site storage deployment, for example, an issue or maintenance window at one data center means all user traffic is directed to the remaining data center, keeping the storage systems highly available.

Improved control over the user experience

GSLB can be used to control which users are directed to which data centers.

Leveraging topology-based routing to optimize the request path, GSLB can ensure users are affinity-mapped to the data center with the lowest RTT (Round Trip Time).

This topology-based routing lets organizations route user traffic to the nearest server, minimizing unnecessary bandwidth consumption, shortening the ‘hops’ that user requests have to travel, and speeding up server responses.

Organizations can also use GSLB to direct user traffic to specific local data centers to enable them to deliver localized content, relating to the geographic location of the users, or meet country-specific regulatory or security requirements.
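One way to picture topology-based routing is as a lookup from the requester's source IP to a preferred site. The sketch below uses Python's standard `ipaddress` module and picks the most specific matching subnet. The subnets, site names, and default site are assumptions made up for the example, not values from any real configuration.

```python
import ipaddress

# Hypothetical topology table: source subnet -> preferred site.
TOPOLOGY = {
    "10.1.0.0/16": "dc-london",
    "10.2.0.0/16": "dc-frankfurt",
    "10.2.50.0/24": "dc-frankfurt-edge",
}


def site_for(source_ip: str, default: str = "dc-london") -> str:
    """Return the site whose subnet most specifically matches source_ip."""
    ip = ipaddress.ip_address(source_ip)
    best_site, best_prefix = default, -1
    for cidr, site in TOPOLOGY.items():
        net = ipaddress.ip_network(cidr)
        # Longest prefix wins, so a /24 rule overrides the /16 around it.
        if ip in net and net.prefixlen > best_prefix:
            best_site, best_prefix = site, net.prefixlen
    return best_site


print(site_for("10.2.50.7"))    # dc-frankfurt-edge
print(site_for("10.2.1.1"))     # dc-frankfurt
print(site_for("203.0.113.9"))  # dc-london (no rule matched, default used)
```

The same table could hold country- or regulation-specific rules, since the lookup only cares about which subnet a request comes from.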

GSLB architecture comparisons

Beyond simple redundancy, Global Server Load Balancing (GSLB) serves as a programmable traffic control plane for managing multi-site state synchronization, latency-optimized topology routing, and automated disaster recovery across heterogeneous environments.

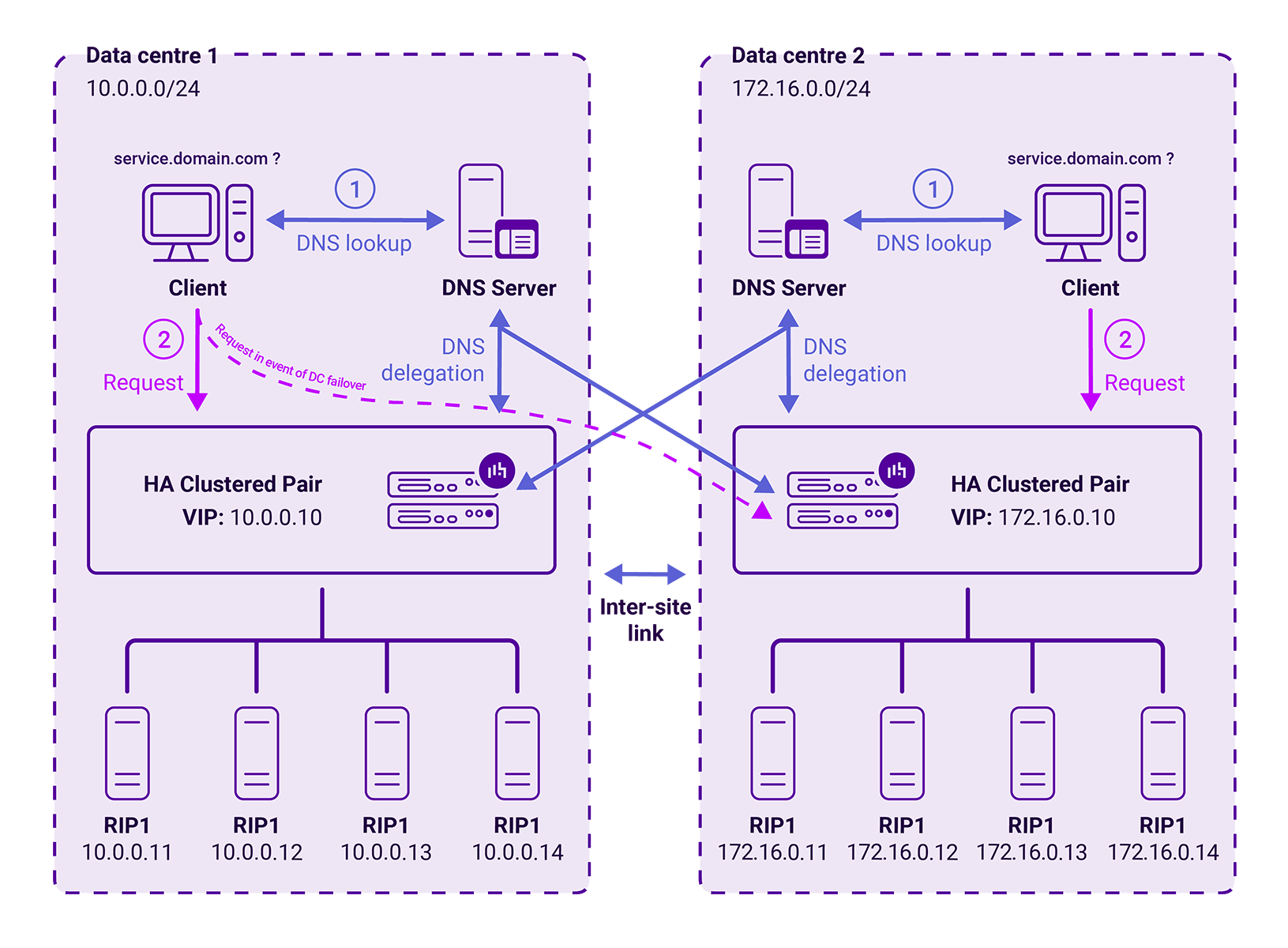

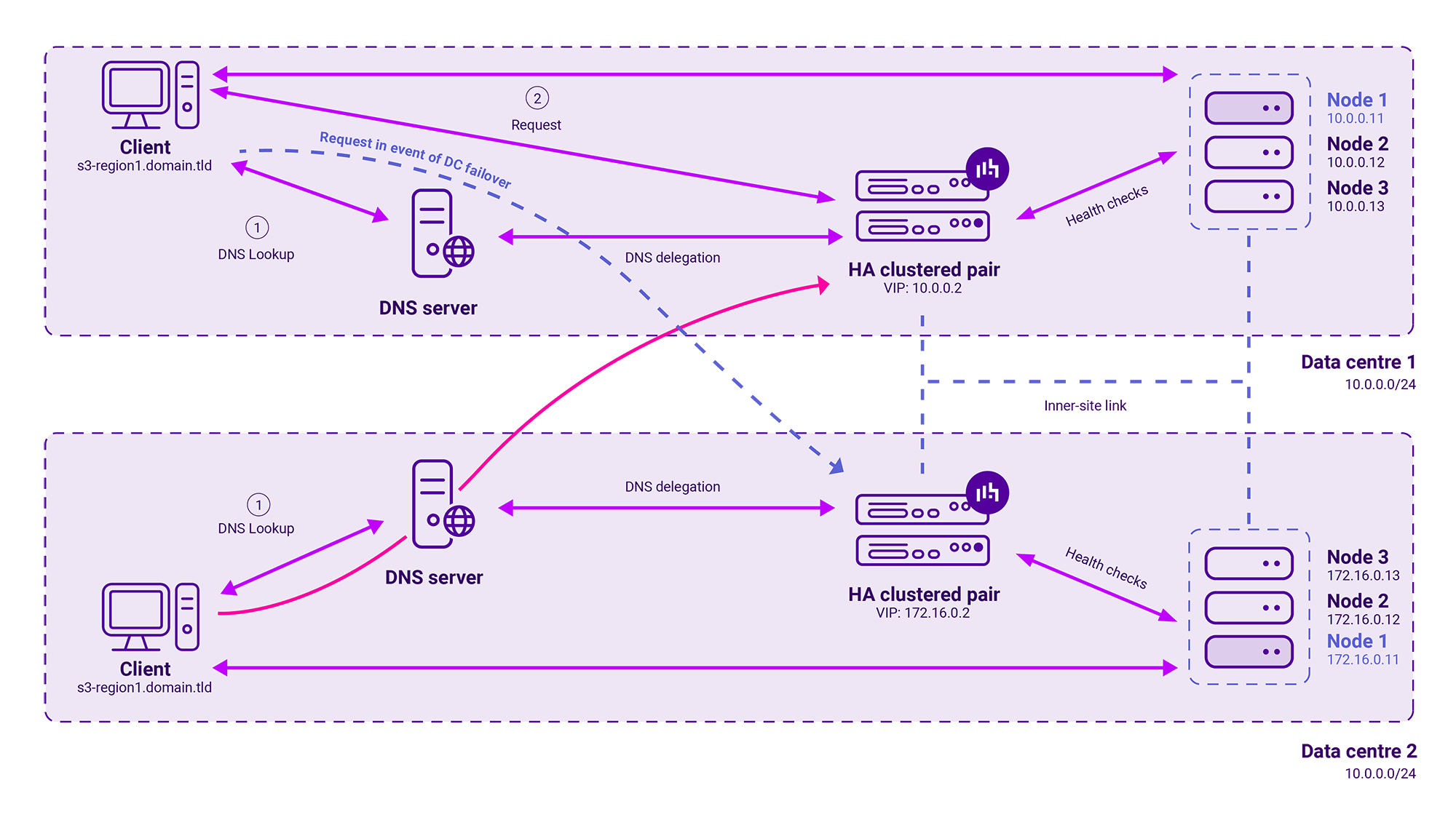

1. GSLB multi-site: Efficiency, performance, or redundancy

Global Server Load Balancing (GSLB) provides traffic distribution across server resources located in multiple locations, for example, multiple data centers or a single disaster recovery (DR) site for redundancy. It passes the traffic straight to the load balancer which does more granular balancing and health checking.

DR site for redundancy example:

Pros of GSLB multi-site:

- Flexible health checks to ensure application uptime.

- Topology-based routing ensures that internal traffic uses the local data center, avoiding the cost and performance issues of over-using WAN links.

- It can detect users’ locations and automatically route their traffic to the best available server in the nearest data center.

Cons of GSLB multi-site:

- Sometimes applications do not support DNS and therefore can’t failover using GSLB (we do however have a solution for that).

Example use case:

- Disaster recovery or multi-site scenarios


2. GSLB direct-to-node: Best DNS round-robin alternative

In a typical DNS round-robin deployment, requests are sent out to the configured nodes indiscriminately. With GSLB direct-to-node, however, the real servers undergo health checks to determine their availability. If a server responds correctly, it is kept within the pool of available nodes that the GSLB returns in DNS answers. If a server fails to respond correctly, it is excluded from that pool.
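The pool filtering described above can be sketched in a few lines of Python. This is a simplified illustration, not the product's implementation: `tcp_check` treats a node as healthy if a TCP connection opens within a timeout, and `available_pool` is a hypothetical helper that keeps only the nodes that pass. The node addresses in the usage example are placeholders.

```python
import socket


def tcp_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Healthy if a TCP connection can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def available_pool(nodes, check=tcp_check):
    """Keep only the (host, port) pairs that pass the health check."""
    return [(host, port) for host, port in nodes if check(host, port)]


# Usage with a stubbed check, so the example runs without a network:
nodes = [("192.0.2.11", 443), ("192.0.2.12", 443)]
only_first_up = lambda host, port: host == "192.0.2.11"
print(available_pool(nodes, check=only_first_up))  # [('192.0.2.11', 443)]
```

Passing the check as a parameter makes it easy to swap in an HTTP(S) or custom external check without changing the pool logic.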

GSLB direct-to-node passes the traffic straight to the application servers removing the need for a load balancer and massively increasing performance. However, in order to do that with enough granularity we recommend using a feedback agent on each server to dynamically change the weight in the GSLB. This is on top of the usual flexible health checks.
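To make the feedback-agent idea more concrete, here is a hedged sketch of how a reported load figure could become a weight, and how weights could bias node selection. It assumes the agent reports an idle percentage between 0 and 100; that reporting format, and the function names `weight_from_idle` and `pick_node`, are assumptions for illustration only.

```python
import random
from typing import Optional


def weight_from_idle(idle_percent: float, max_weight: int = 100) -> int:
    """Map an agent-reported idle percentage to a weight (busier = lower)."""
    clamped = max(0.0, min(100.0, idle_percent))
    return int(max_weight * clamped / 100)


def pick_node(weights: dict[str, int], rng: random.Random) -> Optional[str]:
    """Choose one node at random, in proportion to its weight."""
    candidates = {name: w for name, w in weights.items() if w > 0}
    if not candidates:
        return None  # every node is saturated or drained
    names = list(candidates)
    return rng.choices(names, weights=[candidates[n] for n in names], k=1)[0]


# Hypothetical agent reports: node-a is mostly idle, node-b is saturated.
weights = {
    "node-a": weight_from_idle(80.0),
    "node-b": weight_from_idle(0.0),
}
print(weights)                              # {'node-a': 80, 'node-b': 0}
print(pick_node(weights, random.Random()))  # node-a
```

A weight of zero effectively drains a node without marking it as failed, which is one reason to keep weights separate from health checks.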

Pros of GSLB direct-to-node:

- Best alternative to a typical DNS Round-Robin deployment.

- It can scale your services almost without limit, because all request traffic is out of band (outside the path of the load balancer), so the load balancer is not a bottleneck.

- At scale, it is surprisingly granular when balancing large amounts of traffic across large numbers of application servers.

- Can be deployed in combination with topology-based routing for site affinity.

- Very low cost, low resource, relatively simple and incredibly fast.

Cons of GSLB direct-to-node:

- Requires careful thought about the sheer number of health checks and feedback agent responses, although this is rarely an issue in production.

- It is out of path, so has less visibility of traffic flows than a load balancer.

- Limited persistence options, and no SSL offload or WAF.

- You should only use GSLB direct-to-node in extremely high-throughput environments where your load balancer is no longer able to keep up with throughput.

Example use cases:

- High-volume request workloads such as Veeam backup and recovery.


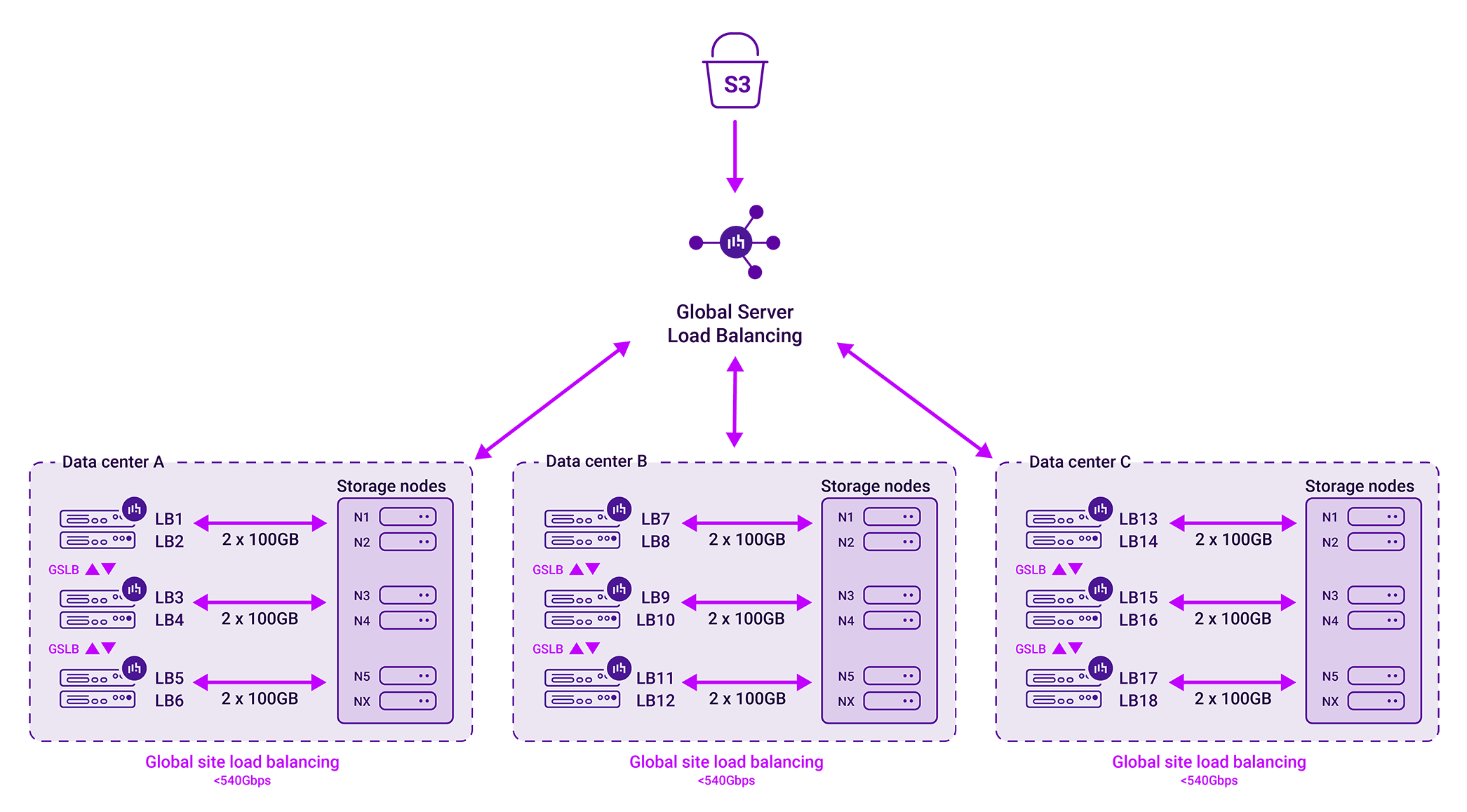

3. GSLB active-active: Horizontal scaling

Active-active GSLB can be used to scale horizontally when there is a requirement to exceed approximately 88 Gbps through the load balancer (and direct-to-node GSLB isn’t suitable). An even distribution across multiple data centers should be considered (if applicable) and redundancy accounted for.
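The sizing question here is simple arithmetic, sketched below. It assumes the roughly 88 Gbps figure above is the usable throughput of one load balancer (or clustered pair), and that one spare is added for N+1 redundancy; both are assumptions for the example, so substitute measured figures for your own hardware.

```python
import math


def nodes_required(target_gbps: float, per_node_gbps: float = 88.0,
                   spare: int = 1) -> int:
    """Load balancers needed to carry target_gbps, plus spare capacity."""
    if target_gbps <= 0 or per_node_gbps <= 0:
        raise ValueError("throughput values must be positive")
    # Round up to whole units, then add the redundancy spare(s).
    return math.ceil(target_gbps / per_node_gbps) + spare


print(nodes_required(200))           # 4  (3 to carry the load, 1 spare)
print(nodes_required(88, spare=0))   # 1
```

Spreading those units evenly across data centers, where applicable, keeps the loss of any one site within the spare capacity.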

Single site active/active example:

Pros of GSLB active-active:

- A simple method of scaling out for bandwidth (<2.8Tbps).

- Total throughput that grows with each load balancer added.

- More cost-effective for large-scale deployments.

- A future-proofed configuration using GSLB.

Cons of GSLB active-active:

- Configuring and managing an active-active GSLB setup is more complex than an active/passive setup.

- If issues arise, troubleshooting can become more complex as you need to consider potential problems with both GSLB devices and the underlying network infrastructure.

Example application:

- High-performance computing (HPC) and advanced AI workloads, including Weka, RedHat, Dremio, and many more.


Why Loadbalancer.dk for GSLB?

For those needing enterprise-grade resilience without the enterprise tax or the complexity of vendors like F5 or Citrix, Loadbalancer.dk is a great choice!

While many GSLB solutions focus purely on geography (geo-IP), we differentiate ourselves by prioritizing application-aware locality and simplicity.

1. Smart GSLB vs. traditional geo-IP

Most GSLB solutions simply look at the user's IP and route them to the nearest data center, whereas Loadbalancer uses Smart GSLB, which integrates deeper infrastructure data:

- Infrastructure awareness: Our GSLB doesn't just look at the user; it looks at your internal subnet topology. This ensures local traffic stays local, which is critical for high-bandwidth applications like AI infrastructure or object storage.

- Real-time telemetry: Using a lightweight Feedback Agent (installed on Windows/Linux), our GSLB receives real-time performance data from the actual servers. This prevents the 'hot node' problem where a 'near' server is chosen even if it is currently overwhelmed.

- Zero TTL support: We support a Time-to-Live (TTL) of zero, ensuring DNS results are never cached too long and failovers happen instantly.
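To see why TTL matters for failover, consider a rough back-of-the-envelope model: the time a client may keep using a failed site is roughly the time taken to detect the failure plus the time a cached DNS answer stays valid. The parameter names and example values below are hypothetical, and the model ignores resolvers or clients that cache for longer than the TTL asks.

```python
def worst_case_failover_seconds(check_interval_s: float,
                                failures_to_mark_down: int,
                                dns_ttl_s: float) -> float:
    """Rough upper bound on how long clients may keep hitting a failed site."""
    # Time for the health checker to declare the node down.
    detection = check_interval_s * failures_to_mark_down
    # Cached answers can keep pointing at the old site for up to one TTL.
    return detection + dns_ttl_s


print(worst_case_failover_seconds(5, 3, 0))     # 15
print(worst_case_failover_seconds(5, 3, 300))   # 315
```

With these example numbers, a five-minute TTL dominates the failover time, while a TTL of zero leaves detection time as the main factor.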

2. Radical cost transparency

Another major reason engineers choose us is our licensing model:

- No "add-on" fees: Many vendors (F5, Citrix, Kemp) treat GSLB as a separate module or a "bolt-on" license. With Loadbalancer.dk, GSLB is a free, integral component included in all physical and virtual appliances.

- Unrestricted licensing: We typically offer uncapped throughput and connection limits, meaning your GSLB costs don't spike just because your traffic does.

3. Our 'clever, not complex' philosophy

Our GSLB platform is designed specifically for engineers who need to deploy quickly without a month-long certification course.

- 30-minute setup: We provide a guided graphical interface that simplifies GSLB configuration, which is notoriously one of the most complex tasks in networking.

- Direct-to-node options: For specific high-performance use cases like storage clusters, we provide GSLB direct-to-node. This removes the load balancer from the traffic path entirely after the initial DNS resolution, allowing for unrestricted throughput, limited only by your network hardware.

Loadbalancer vs. F5/Citrix GSLB at a glance

While Loadbalancer's Enterprise load balancer offers free GSLB, sub-hour setup, and direct Tier 3 support, both F5 BIG-IP and Citrix NetScaler require expensive add-ons and lengthy implementation times to handle their more complex traffic steering:

| Feature | Loadbalancer Enterprise | F5 BIG-IP | Citrix NetScaler |
|---|---|---|---|
| GSLB cost | Included (free!) | Expensive add-on | Expensive add-on |
| Setup time | Less than 1 hour | Days/weeks | Days/weeks |
| Focus | Locality and app-based | Complex traffic steering | Complex traffic steering |
| Support | 24/7 Tier 3 engineers | Tiered/escalation based | Tiered/escalation based |

Summary of GSLB benefits: If you've got it, use it!

If you're already a Loadbalancer.dk customer, Global Server Load Balancing is already included, at no extra cost. So if you've got it, use it!

And harness the benefits of:

- Automated multi-site failover: no manual DNS updates are needed.

- Reduced WAN costs: by keeping traffic within local subnets when possible, you save significantly on cloud egress and inter-site bandwidth.

- Data sovereignty: easily define rules to ensure users stay within specific geographic boundaries to meet regulatory requirements (GDPR/HIPAA).